Science, in general, consists of two steps performed in a loop: pick something to study, then study it. Then pick the next thing to study, and repeat.

This blog, Extreme Sciencing, focuses on the second step of this process: how exactly do we perform the study? Are there better approaches to the scientific process? Can we borrow techniques from other fields of human experience?

The first step is just as important. There are roughly two approaches to finding something to investigate, especially at the beginning of a career:

- Postmodern approach: “listen to the conversation”, decide what is missing or wrong, and fix it, thus moving the conversation forward.

- Artistic approach: wait for enlightenment from a higher power to show you the path, and follow that path, staying untethered from a mainstream that can be wrong or merely fashionable.

This is a philosophical distinction, so there cannot be “the best” approach. But the distinction is critical to understanding the modern problems of science.

The first approach (reading the current literature, listening to scientists, and figuring out what is missing) has at least two problems. First, the “conversation” can be extremely noisy, or just plain wrong. People still can’t explain exactly how they performed their experiments, or provide enough data for replication! So when you read a paper claiming that something is correct or wrong, that paper often cannot be trusted. Accumulating noisy information can give a more reliable picture, but with the ultra-specialization of science there might not be enough samples to average over.

Second, the conversation can be about something shitty, like Deep Fakes or other bad applications of AI. As Harvard CS professor James Mickens says: “If you are a PhD student studying Deep Fakes – drop out now. Your work will not make society better, it will only be used for revenge porn and disinformation”. And today AI is being pushed into a seemingly endless range of applications, including some that perhaps shouldn’t exist, such as in the US justice system.

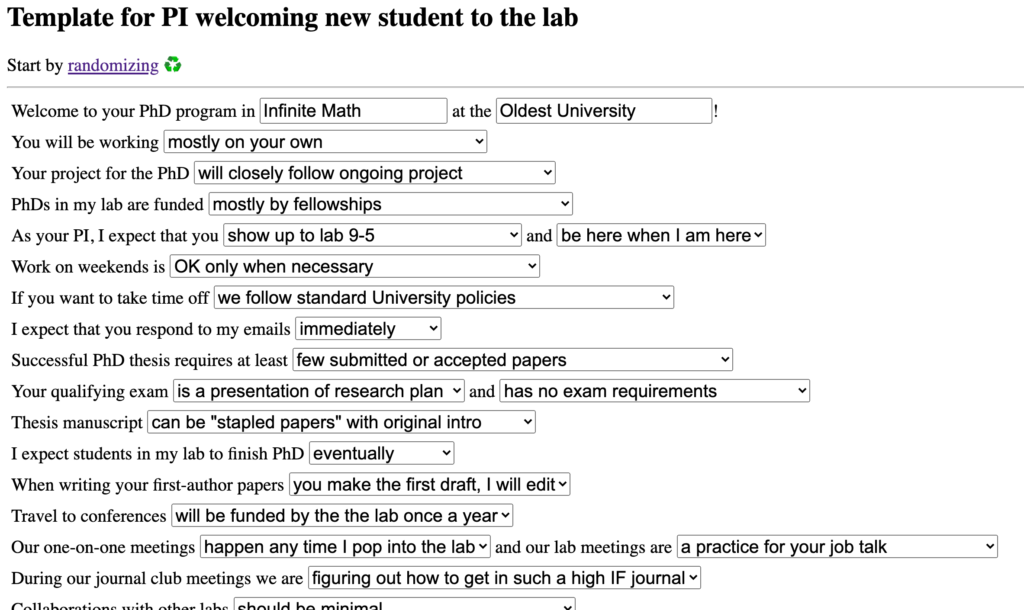

The problem with the second approach to finding a scientific question (believing in a higher Truth and being guided by some external power) is that it is too easy to become untethered from reality. Not only does it create an opportunity for pseudo-science and general crankery, but it also creates an unhealthy balance of power. How many times have you heard “You are working in science, you can’t do it for money!” or some other appeal to passion for the question? Once you accept the existence of a higher power, it is easy to forget about human dignity, and that we have to serve ourselves first and foremost.

In conclusion, a modern approach to picking scientific questions should combine reliance on the existing literature and on discussions of what is important with a filter that highlights what has the potential to benefit society and what could be harmful.