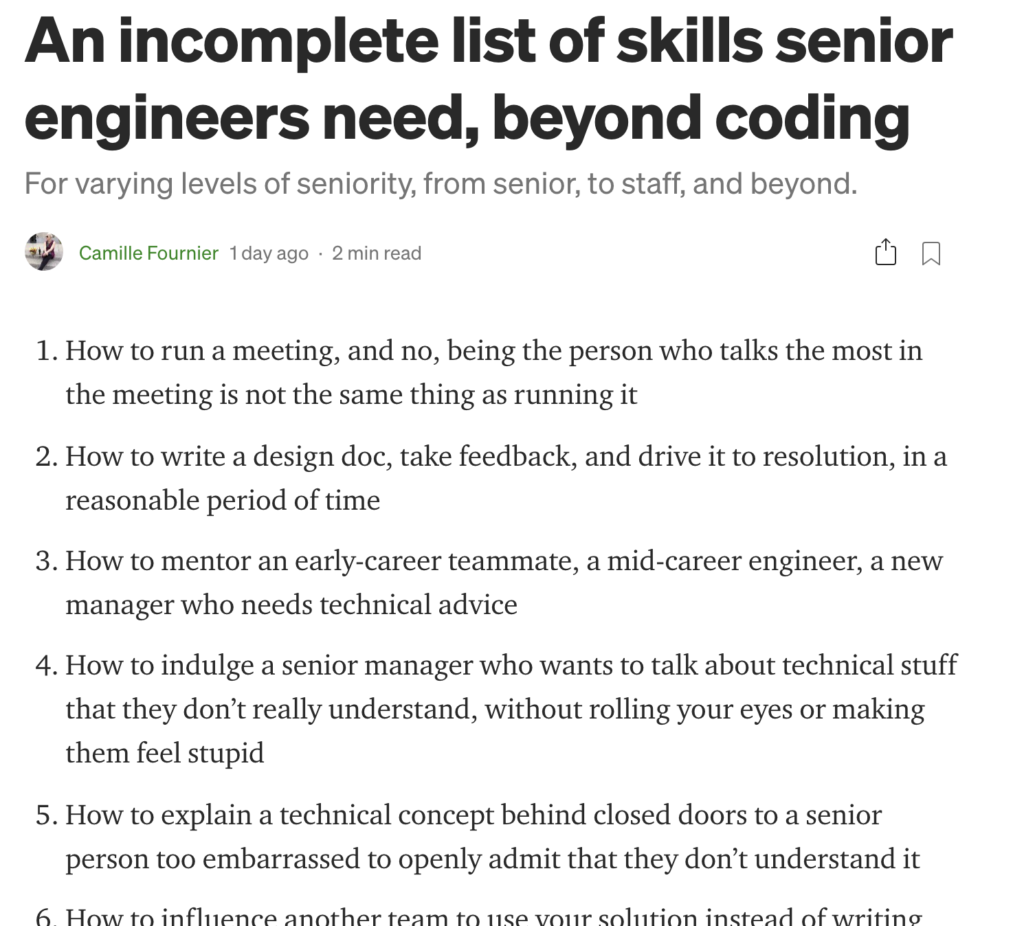

Wonderful examples of transparency about the academic hiring process in a few tweets:

It's tenure-track biomedical academic search season and "chalk talks" are right around the corner. Chalk talks are not something we "teach" our trainees and most postdocs are unprepared for them as a result.

Here are my Chalk Talk Pro Tips: https://t.co/nueGEsD3V7

— Leslie Vosshall PhD (@leslievosshall) October 26, 2021

So we are about to have our 6 junior faculty candidates begin to come in for in person interviews. I sent them this email below to give them an idea of what to expect, and some info/tips for the interview. One of the most important parts of the interview is the chalk talk 1/ pic.twitter.com/4u94CdxamM

— Ben Garcia (@GarciaLabMS) January 5, 2022

14 yrs ago, I was negotiating my startup offer w/ @USCViterbi. Below is a compilation of advice that I received & that I wish I had received. But, at the end of the day, remember: a school is making an investment in you. They want you to be successful. #advice @FuturePI_Slack pic.twitter.com/curbg5LZ36

— Prof. Andrea Armani (@ProfArmani) March 5, 2022

Strategies for preparing for your dissertation defense from our #SecondSunday doctoral writing group:

– Remember you are the expert. Committee members know a small part of your study, but YOU are the expert.

– Practice your presentation multiples with friends. Have them ask…

— P. Shannon-Baker (@PShannonBaker) March 13, 2022

A student who is coming to interview in one of our graduate programs asked me for advice about interviews. So, here is my guidance, in case it's useful. Hopefully others will add their own.

— Max Heiman (@maxwellheiman) December 20, 2021

Well, it's fully into #GRFP results season. If your proposal got funded, congratulations! That's a great achievement and you should be proud.

If your proposal wasn't funded, I know it's disappointing, but it's a disappointment many share, so here's a 🧵reminding that:

[1/7]

— Dr. James Lamsdell (@FossilDetective) April 4, 2022

So I recently finished reviewing a lot of graduate fellowships. Holy moly do we have an amazing talent pool in STEM.

A few thoughts* on what made applications stand-out to me. The gist: tell your story and keep it simple.

*one random person's opinion; ymmv. (1/10)

— Amy Orsborn, PhD 👩‍🔬🐵 (@neuroamyo) January 18, 2021

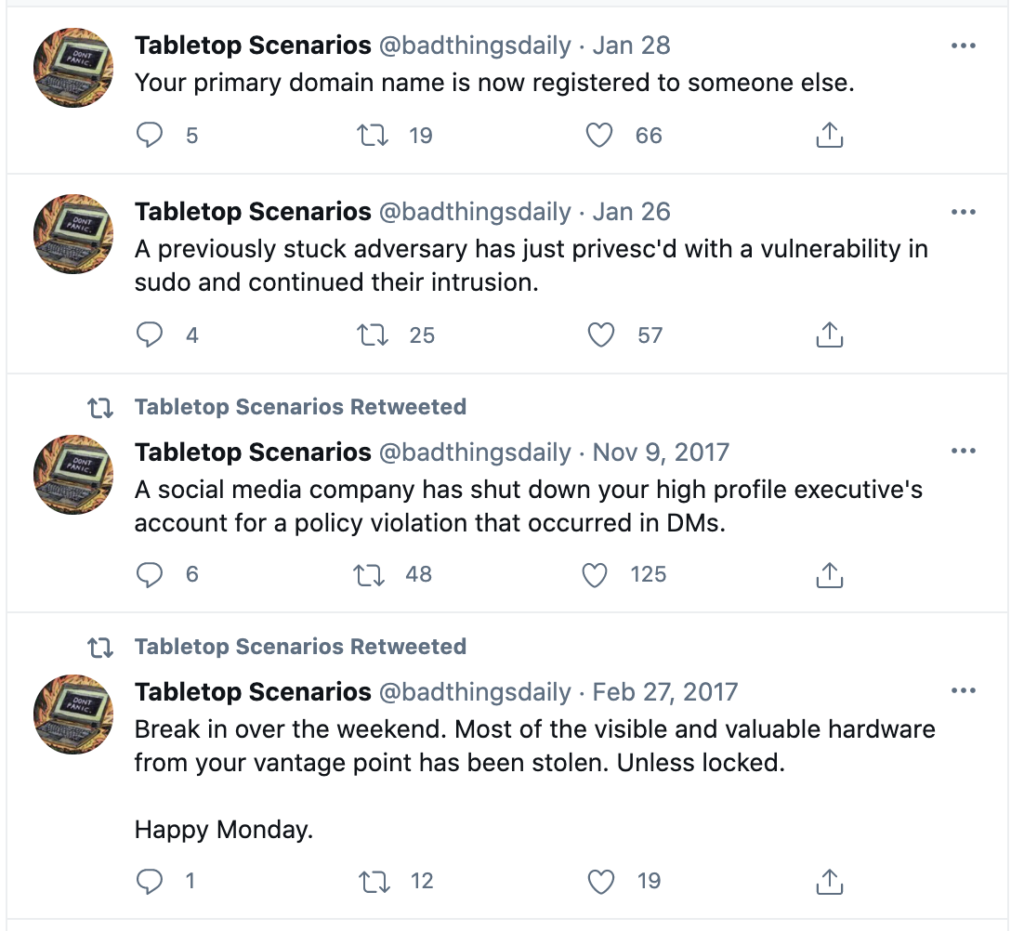

Workplace culture explained – P2 🧵

1. It’s considered rude to decline work social events, even if you have a good excuse. A diplomatic way to avoid them is to agree to go, then cancel at the last minute. People care more about you seeming keen to go than you actually attending.

— Callum Stephen (He/Him) (@AutisticCallum_) April 29, 2022

[long thread] Advice for applying to and interviewing for assistant-professor positions.

Disclaimers:

* Based on my field [physical sciences / engineering] and either US or countries with similar systems

* Advice aimed more at R1s rather than R2s or teaching positions..

— Mikhail Kats (@mickeykats) May 17, 2022

Here's a lil thread of things I *wish* somebody had told me when I started advising STEM grad students as a lab head, PhD program director, & conflict-resolution officer. 🧵 pic.twitter.com/3YzFq9Lhi2

— scott barolo, phd (@sbarolo) June 22, 2022

I've been hired for 2 tenure track jobs and been on multiple committees, sent in more than 100 job applications, and done multiple interviews. Here is my thread 🛢

of job market advice for early career academics based on decades of experience:

1. Get lucky.

— David Webster (@dwebsterhist) August 6, 2022